Task: Use a pre-trained face descriptor model to output a single continuous variable predicting an outcome using Caffe’s CNN implementation.

Picture Reference: https://deeplearning4j.org/linear-regression

So, we’re going to take VGG-Face (a model pre-trained on facial images) and train it to predict a person’s salary. This sounds daft! How can anyone predict a person’s salary simply by looking at their face? You and I cannot do this. But the VGG-16 model has 138 million parameters (weights and biases) that it learns. Each of these weights belongs to a node that may respond to, for example, the skin tone of a person, the shape of their jawbone, or even features that are meaningless or unpredictable to us.

The model’s architecture is based on the VGG-Very-Deep-16 CNN. It is pre-trained on a dataset of 2.6 million face images collected by the VGG group and evaluated on the Labeled Faces in the Wild and YouTube Faces datasets. (More description in the paper: Deep Face Recognition)

The caffe package of the VGG-Face model can be downloaded from here. It contains two files: VGG_Face.caffemodel and VGG_FACE_deploy.prototxt.

- The caffemodel stores the weights and biases given to each node in the model’s architecture.

- The prototxt file defines the architecture of the model. The architecture refers to the various types of “layers”, their number of inputs and outputs, and their other parameters. There are different types of layers, such as convolutional layers, pooling layers, local response normalization layers, ReLU, and loss layers that compute specific loss functions. These layers are connected to each other in a specific order. The data passed between these layers is stored in “blobs”. A layer takes a blob as input, performs its function on it and outputs a resultant blob. The output blob may have a different shape from the input blob because of the operations performed on it.
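To make the point about blob shapes concrete, here is a minimal sketch that traces the spatial size of a blob through a VGG-16 style stack. It assumes the usual VGG-16 layout (3×3 convolutions with pad 1 and stride 1, each stage followed by a 2×2 max pool with stride 2); the helper names are my own and are not part of Caffe.

```python
import math


def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a convolution (rounds down)."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, stride):
    """Spatial output size of an unpadded pooling layer (rounds up)."""
    return math.ceil((size - kernel) / stride) + 1


# Assumed VGG-16 layout: (number of 3x3 convs, output channels) per stage.
STAGES = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def trace_blob_shapes(size=224, channels=3):
    """Return the (channels, height, width) of the blob after each stage."""
    shapes = [(channels, size, size)]
    for n_convs, out_channels in STAGES:
        for _ in range(n_convs):
            size = conv_out(size, kernel=3, stride=1, pad=1)
            channels = out_channels
        size = pool_out(size, kernel=2, stride=2)
        shapes.append((channels, size, size))
    return shapes


shapes = trace_blob_shapes()
for shape in shapes:
    print(shape)
```

The convolutions keep the spatial size and only change the channel count, while every pooling layer halves the width and height, so the blob that reaches the first fully connected layer is much smaller spatially than the input image.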

Now, the VGG Face model has been trained to classify the image of a face and recognize which person it is. The final classification layer has been discarded. We want to tweak the architecture of the model to produce a single output. This requires a number of changes in the prototxt file.

Further, the Caffe package does not contain a prototxt file for training or validation, which means that I have to make my own. It only contains VGG_FACE_deploy.prototxt, which is the layer architecture used for testing the model.

Steps for Fine-Tuning:

- Ignore the input dims in the deploy prototxt and copy the layer information to another file named “train_val.prototxt”. Check the number of output dimensions in the last fully connected layer. I have num_output: 2622. I could change this to 1; however, these 2622 dimensions are face descriptors used for classifying the image, and they may contain important information. Instead, I chose to add a new layer with num_output: 1.

In the VGG_FACE_deploy.prototxt, add:

layer {
  name: "fc9_elq"
  bottom: "fc8"
  top: "fc9_elq"
  type: "InnerProduct"
  inner_product_param {
    num_output: 1
  }
}

Note: name is the name of the layer itself, bottom is the name of the blob the layer takes as input, and top is the name of the blob it produces.

I created a new layer which is fully connected (hence the type “InnerProduct”) with num_output: 1. If you have more than one score to predict, you can change this number accordingly. The bottom parameter refers to the blob that is passed to this layer from the previous layer; it is the blob named in the top parameter of that previous layer. The input is therefore a blob named “fc8” and the output is a blob which I have named “fc9_elq”.
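As a rough illustration of what this layer computes, the sketch below implements a fully connected layer as a weighted sum plus a bias. The weight, bias and input values are placeholders chosen for illustration, not trained values; only the sizes (2622 inputs from fc8, 1 output) come from the setup above.

```python
def inner_product(weights, bias, inputs):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


NUM_INPUTS = 2622   # length of the fc8 blob
NUM_OUTPUTS = 1     # num_output of fc9_elq

# Placeholder values purely for illustration, not trained parameters.
weights = [[0.001] * NUM_INPUTS for _ in range(NUM_OUTPUTS)]
bias = [0.5] * NUM_OUTPUTS
fc8 = [1.0] * NUM_INPUTS

fc9_elq = inner_product(weights, bias, fc8)

# The new layer adds one weight per input plus one bias per output.
num_params = NUM_OUTPUTS * NUM_INPUTS + NUM_OUTPUTS
print(len(fc9_elq), num_params)
```

With a single output, the new layer only adds a few thousand parameters on top of the pre-trained network, which is tiny next to the rest of the model.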

- In the VGG_train_val.prototxt, add:

layer {
  name: "fc9_elq"
  bottom: "fc8"
  top: "fc9_elq"
  type: "InnerProduct"
  param {
    lr_mult: 10
    decay_mult: 1
  }
  param {
    lr_mult: 20
    decay_mult: 0
  }
  inner_product_param {
    num_output: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}

I am randomly initializing the weights of this layer from a Gaussian with mean 0 and standard deviation 0.01. It might seem more intuitive to simply initialize all the weights to 0, but this would be a mistake. If all the neurons in a network compute the same output, they will also compute the same gradients in backpropagation and then undergo exactly the same parameter updates. This is undesirable. A source of asymmetry is therefore required, and the weights should not all be initialized to the same value. Drawing the node weights from a Gaussian distribution makes them point in random directions in the input space. You could try doing the same with a uniform distribution, although the impact on the final result should be minimal. One caveat is that the variance of a randomly initialized node's output grows with its number of inputs. There are methods to calibrate for this; however, in my case, I only have one output node to worry about.
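The symmetry argument can be checked on a toy network. The sketch below is not the VGG-Face model; it assumes a tiny network with two tanh hidden units and a squared-error loss, and compares the gradients the two hidden units receive under constant and Gaussian initialization. All names and values are made up for illustration.

```python
import math
import random


def hidden_gradients(hidden_weights, output_weights, x, target):
    """Gradient of 0.5 * (y - target)**2 w.r.t. each hidden unit's weights.

    Toy network: h_j = tanh(w_j . x), y = sum_j v_j * h_j.
    """
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)))
              for row in hidden_weights]
    y = sum(v * h for v, h in zip(output_weights, hidden))
    error = y - target
    return [[error * v * (1.0 - h * h) * xi for xi in x]
            for v, h in zip(output_weights, hidden)]


x, target = [0.5, -1.0, 2.0], 1.0

# Every weight set to the same constant: the two hidden units are
# indistinguishable, so they receive identical gradients.
same = hidden_gradients([[0.1] * 3, [0.1] * 3], [0.1, 0.1], x, target)

# Gaussian initialization (mean 0, std 0.01) breaks the symmetry.
rng = random.Random(0)
gauss_hidden = [[rng.gauss(0.0, 0.01) for _ in x] for _ in range(2)]
gauss_output = [rng.gauss(0.0, 0.01) for _ in range(2)]
broken = hidden_gradients(gauss_hidden, gauss_output, x, target)

print("constant init, gradients identical:", same[0] == same[1])
print("gaussian init, gradients identical:", broken[0] == broken[1])
```

With identical gradients the two units would stay identical after every update, which is why some randomness in the initial weights is needed.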

I want this layer to learn faster than all the other layers, since the other layers have been pre-trained on the VGG dataset. The lr_mult is useful for this purpose: its value is multiplied by the base_lr (as defined in the solver file) to give the learning rate for the layer (new_lr = lr_mult*base_lr). The decay_mult does the same for the weight decay. The weight decay (as defined in the solver) is the regularization parameter which penalizes large weights. (This is explained well in the Fast-RCNN paper: https://arxiv.org/pdf/1504.08083.pdf) The first param block applies to the layer's weights (lr_mult: 10, decay_mult: 1) and the second to its biases (lr_mult: 20, decay_mult: 0). The biases therefore learn at twice the rate of the weights and are not penalized by weight decay, while the weights stay regularized throughout training.
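The arithmetic behind the multipliers is simple enough to sketch. In Caffe, the first param block of a layer applies to its weights and the second to its biases. The solver values below (base_lr and weight_decay) are hypothetical, as is the assumption that the pre-trained layers use lr_mult: 1; substitute the numbers from your own solver and train_val files.

```python
# Hypothetical solver values, not taken from the original setup.
BASE_LR = 0.001
WEIGHT_DECAY = 0.0005


def effective_rates(lr_mult, decay_mult,
                    base_lr=BASE_LR, weight_decay=WEIGHT_DECAY):
    """Return (learning rate, weight decay) actually applied to a parameter."""
    return base_lr * lr_mult, weight_decay * decay_mult


# First param block of fc9_elq: the weights.
weights_lr, weights_decay = effective_rates(lr_mult=10, decay_mult=1)

# Second param block of fc9_elq: the biases.
bias_lr, bias_decay = effective_rates(lr_mult=20, decay_mult=0)

# Assumed multipliers for a pre-trained layer, for comparison.
pretrained_lr, pretrained_decay = effective_rates(lr_mult=1, decay_mult=1)

print("new layer weights:", weights_lr, weights_decay)
print("new layer biases: ", bias_lr, bias_decay)
print("pre-trained layer:", pretrained_lr, pretrained_decay)
```

Under these assumed values, the new layer's weights move ten times faster than a pre-trained layer's weights, and its biases twenty times faster with no decay applied.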

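Note: Caffe matches the layers in the prototxt to the weights in the pre-trained caffemodel by layer name. If you change the number of outputs of an existing FC layer and give it a random initialization, that initialization will not be used unless you also change the name of the layer: Caffe will still try to load the pre-trained weights into it, and because the shapes no longer match, this typically fails with a shape-mismatch error. Rename the layer so that it is trained from scratch.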
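The name-matching rule can be pictured with a small simulation. This is a simplified model of the behaviour described in the note, not Caffe's actual loader: layers are matched purely by name, and a matched layer must have the same shape in both places. The function name and the dictionaries are hypothetical.

```python
def plan_weight_loading(pretrained_shapes, net_shapes):
    """Decide, per layer, whether weights are copied or trained from scratch."""
    plan = {}
    for name, shape in net_shapes.items():
        if name not in pretrained_shapes:
            plan[name] = "train from scratch"
        elif pretrained_shapes[name] == shape:
            plan[name] = "copy pre-trained weights"
        else:
            raise ValueError(
                f"shape mismatch for layer {name!r}: "
                f"{pretrained_shapes[name]} vs {shape}; rename the layer")
    return plan


# (num_output, num_input) of the last fully connected layers.
pretrained = {"fc7": (4096, 4096), "fc8": (2622, 4096)}

# A new layer under a new name keeps its random initialization.
renamed = plan_weight_loading(pretrained, {**pretrained, "fc9_elq": (1, 2622)})
print(renamed)

# Same name, different num_output: the old weights cannot be reused.
try:
    plan_weight_loading(pretrained, {"fc7": (4096, 4096), "fc8": (1, 4096)})
    mismatch_detected = False
except ValueError as err:
    mismatch_detected = True
    print(err)
```

This is why I added fc9_elq as a new, uniquely named layer rather than resizing fc8 in place.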

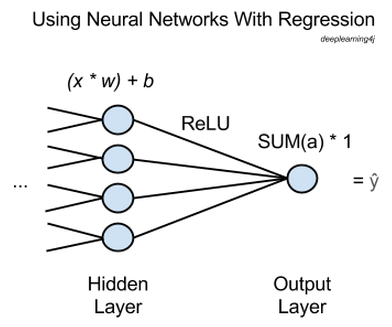

- Add a loss layer in the train_val.prototxt file for both phases: train and test. Since the task is regression, I would prefer RMSE as the loss function, which is used to update the values of the weights and biases in the network. However, Caffe does not provide an RMSE loss layer. Instead, I used the EuclideanLoss layer.

If you changed the number of outputs in the last layer, then delete the ReLU layer that comes immediately before the changed final layer. ReLU clips every negative output of the FC layer to 0, which is not wanted in regression; we would prefer to deal with the raw, unclipped values.

layer {
  name: "loss"
  type: "EuclideanLoss"
  bottom: "fc9_elq"
  bottom: "label"
  top: "loss"
}

layer {
  name: "losstest"
  type: "EuclideanLoss"
  bottom: "fc9_elq"
  bottom: "label"
  top: "losstest"
  include: { phase: TEST }
}
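To see how the EuclideanLoss layers above relate to the RMSE I originally wanted, here is a small sketch. It assumes Caffe's documented definition of the Euclidean loss, the sum of squared differences divided by 2N, with one output per sample; the predictions and labels are made-up numbers.

```python
import math


def euclidean_loss(predictions, labels):
    """Sum of squared differences divided by 2N (N = number of samples)."""
    n = len(predictions)
    return sum((p - y) ** 2 for p, y in zip(predictions, labels)) / (2.0 * n)


def rmse(predictions, labels):
    """Root mean squared error."""
    n = len(predictions)
    return math.sqrt(sum((p - y) ** 2 for p, y in zip(predictions, labels)) / n)


predictions = [2.0, 4.0, 6.0]   # made-up network outputs
labels = [1.0, 4.0, 8.0]        # made-up targets

loss = euclidean_loss(predictions, labels)
error = rmse(predictions, labels)

# With a single output per sample, RMSE can be recovered from the loss.
recovered = math.sqrt(2.0 * loss)
print(loss, error, recovered)
```

Since the square root is monotonic, minimizing the Euclidean loss also minimizes the RMSE, and the reported loss can be converted to RMSE afterwards with sqrt(2 * loss).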

- Finally, the data layer.

The input to all networks is a face image of size 224×224 with the average face image (computed from the training set) subtracted – this is critical for the stability of the optimisation algorithm.
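The mean subtraction can be sketched on toy data. The example below assumes tiny single-channel 2×2 "images" stored as nested lists, purely to show the arithmetic; the real inputs are 224×224 colour images, and the helper names are my own.

```python
def mean_image(images):
    """Pixel-wise average over a list of equally sized 2-D images."""
    count = len(images)
    rows, cols = len(images[0]), len(images[0][0])
    return [[sum(img[r][c] for img in images) / count for c in range(cols)]
            for r in range(rows)]


def subtract_mean(image, mean):
    """Subtract the mean image from one image, pixel by pixel."""
    return [[pixel - m for pixel, m in zip(img_row, mean_row)]
            for img_row, mean_row in zip(image, mean)]


# Made-up training set of two 2x2 single-channel images.
training_set = [
    [[10, 20], [30, 40]],
    [[30, 40], [50, 60]],
]

mean = mean_image(training_set)
centered = [subtract_mean(img, mean) for img in training_set]

print(mean)
print(centered)
```

After subtraction, the pixel values of the training set are centred around zero, which keeps the inputs to the first layer in a range the pre-trained weights expect.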